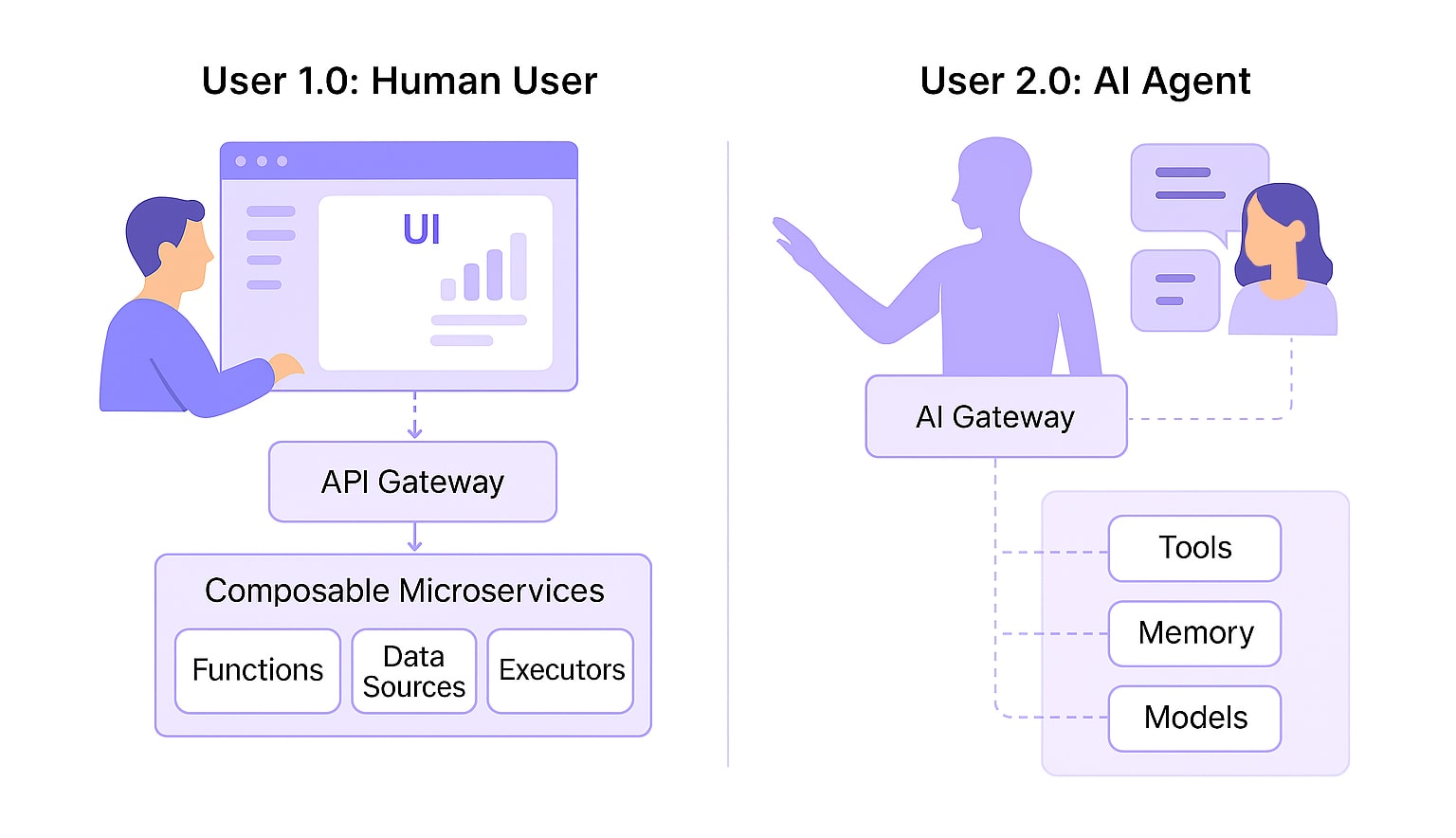

Until recently, software systems were built almost exclusively with one kind of user in mind: humans. Interfaces were crafted to guide human attention. APIs were exposed for human-triggered logic. Business rules were encoded with the assumption that decisions start and end with people.

But that assumption no longer holds.

AI agents are no longer just copilots; they are active users of enterprise systems. They trigger workflows, retrieve information, make decisions, and orchestrate multi-step logic, often faster and more autonomously than any human could. These agents don’t just augment the UI: they interface directly with the system, bypassing traditional layers of interaction altogether.

And this shift is more than a UX challenge; it’s an architectural one. To realize the full potential of agentic platforms, we must design systems where AI is not a feature on top but a first-class user.

In this article, we explore how to approach system design from an AI-first perspective: what changes when agents are in control, what technical design principles matter most, and how to ensure orchestrated agent execution is scalable, governable, and enterprise-ready—what a true AI agent development platform must deliver.

Key Takeaways:

- AI agents are emerging as active users of enterprise systems—not just assistants.

- An AI agent development platform must support orchestration, state, and structured logic.

- Agentic platforms enable contextual execution, retry logic, and multi-agent collaboration.

- Human-AI handoffs require explainability, traceability, and override controls.

- Rierino provides the foundation for execution-first AI through orchestration, governance, and developer control.

Why Systems Need to Be Designed for AI as a User

The rise of generative AI agents marks a fundamental shift in the nature of enterprise software. In a human-centric world, systems are designed to be intuitive, forgiving, and sometimes even ambiguous—people can infer, adjust, and recover. But AI agents need determinism. They rely on structured, machine-readable feedback. While they’re improving at interpreting interfaces and inferring intent, agents work best when systems expose logic and state directly.

When the user is an AI and not a person, the system must support execution, not just experience.

This is why designing systems for agents isn’t simply a UX consideration; it’s a deeper architectural responsibility.

In our previous article, Building Empowered AI Agents for Enterprise, we explored how AI agents are becoming autonomous actors capable of making decisions, coordinating logic, and driving multi-step flows. But to truly empower those agents, the systems they interact with must be just as capable—structured enough to support autonomy, resilient enough to handle orchestration, and transparent enough to allow traceability.

So what does that actually mean for enterprise systems?

- They need to surface capabilities as well-defined, reliable interfaces, not hide them behind human-centric screens or workflows.

- Processes should be modular, traceable, and recoverable, allowing agents to retry, resume, or roll back as needed without starting over or requiring manual intervention.

- Relevant business context must be exposed in a way agents can understand, enabling them to make decisions based on current state, history, and predefined rules.

In short, if a human user expects helpful prompts and visual cues, an AI agent needs structure, clarity, and control.

Most legacy systems weren’t built with this in mind. Automation has often been treated as an add-on—something handled by scripts or bots at the edge, rather than at the heart of the system. This leads to brittle experiences where AI agents are forced to “work around” systems instead of working with them.

This is exactly why the concept of an agentic platform is emerging.

An agentic platform recognizes AI as a core user of the system. It’s not just about plugging in an LLM; it’s about enabling long-running orchestration, event-driven execution, and safe, governed autonomy. It creates the foundation for agents to interact meaningfully with systems: not just to initiate tasks, but to reason about them, adapt to outcomes, and even coordinate with other agents when necessary.

At Rierino, this perspective is built into how we’ve approached orchestration, execution, and system design. By empowering agents through a structured, observable, and context-aware environment, we’re enabling AI to operate not just more intelligently but more effectively and responsibly in real-world enterprise scenarios.

What It Means for a System to Be Agent-Friendly

Designing systems for AI as a user requires more than exposing a few APIs or adding an LLM wrapper. To support agentic execution, systems must become structured, predictable, and programmable in a way that aligns with how agents operate—not how humans think.

An agent-friendly system doesn’t rely on visual cues or context switching. It delivers logic and feedback in well-defined, machine-consumable formats. It treats interaction as orchestration, not navigation.

Here are the core characteristics that define agent-friendly design:

- Deterministic interfaces: Agents rely on consistency. If the same input doesn’t produce the same output, or if error handling is unpredictable, agents can’t reason through flows effectively. Every outcome should be inspectable, repeatable, and clearly defined.

- Idempotent actions and retry-safe logic: In distributed agent execution, failures happen. While models are becoming more resilient to variable conditions, issues such as network blips, timeouts, and partial updates can still disrupt flows. Agent-compatible systems must support safe retries without causing duplication or corruption of state.

- Structured feedback over free text: While LLMs can interpret natural language, system responses to agents should favor structured formats such as status codes, typed outputs, and contextual metadata. This enables chaining of logic and conditional branching without brittle prompt engineering.

- Stable contracts, not implicit behavior: Human users can infer undocumented logic. Agents are improving at generalizing from patterns, but still rely on explicit, consistent rules to operate reliably. If a business rule exists, it needs to be expressed in code or configuration, not hidden behind procedural quirks.

- Separation of interface and execution: Systems designed for agents expose capabilities through modular services rather than UI-embedded logic. This decoupling ensures that agents can orchestrate tasks without being tied to human-oriented flows.
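To make the "structured feedback" and "deterministic interface" points concrete, here is a minimal Python sketch. The names `ActionResult`, `Status`, `reserve_stock`, and the `OUT_OF_STOCK` reason code are hypothetical and chosen only for illustration; they are not part of any particular platform's API.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Optional


class Status(Enum):
    OK = "ok"
    RETRYABLE_ERROR = "retryable_error"
    REJECTED = "rejected"


@dataclass(frozen=True)
class ActionResult:
    """Typed response an agent can branch on without parsing prose."""
    status: Status
    data: dict[str, Any] = field(default_factory=dict)
    reason_code: Optional[str] = None  # machine-readable, e.g. "OUT_OF_STOCK"


def reserve_stock(inventory: dict[str, int], sku: str, qty: int) -> ActionResult:
    # Same input and same inventory state always produce the same outcome.
    available = inventory.get(sku, 0)
    if available < qty:
        return ActionResult(Status.REJECTED, {"available": available}, "OUT_OF_STOCK")
    inventory[sku] = available - qty
    return ActionResult(Status.OK, {"reserved": qty, "remaining": inventory[sku]})


def next_step(result: ActionResult) -> str:
    # Conditional branching driven by status and reason code, not free text.
    if result.status is Status.OK:
        return "continue"
    if result.status is Status.RETRYABLE_ERROR:
        return "retry"
    return "backorder" if result.reason_code == "OUT_OF_STOCK" else "escalate"
```

Because the outcome is a typed value rather than a sentence, the calling agent can chain steps on `status` and `reason_code` without any prompt engineering around error messages.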

Most traditional enterprise systems were not built with these principles in mind. They emphasize user experience over agent usability, often coupling backend logic with UI flows, hardcoding business rules, or relying on manual checkpoints.

There’s nothing wrong with enhancing UI using generative AI—features like smart suggestions, autofill, and dynamic content are useful improvements for human users. We explored several of these in 4 Gen AI Use Cases in User Interfaces.

But designing for agents goes beyond the interface. It requires systems that can be reasoned about, navigated, and executed autonomously by AI.

That’s why agent orchestration platforms must do more than connect to systems—they must compensate for their limitations, enable observability, and wrap legacy endpoints in reliable, composable flows.

This is where platforms like Rierino come in. By abstracting backend complexity and offering saga-based orchestration with state management, timeouts, and error recovery, we make it possible for AI agents to interact with even imperfect systems in a safe, scalable way.

The goal isn’t to rewrite everything for agents from scratch. It’s to design an execution layer that makes those interactions predictable, governable, and agent-compatible—even across fragmented architectures.

Core Design Principles for Agentic Platforms

If traditional systems are optimized for human usability, agentic platforms are optimized for machine reasoning and orchestration. These platforms treat AI agents as first-class participants in system interactions, requiring a shift in how execution, logic, and system state are structured and exposed.

Here are five foundational principles that define agentic platform architecture:

1. Idempotency and State Awareness

AI agents often operate in multi-step workflows where the same action may be retried due to timeouts, interruptions, or dynamic re-evaluation. An agentic platform must treat idempotency not as a best practice, but as a baseline requirement. Every action should have a predictable effect, even when triggered multiple times, and system state must be queryable and consistent to support decision checkpoints along the way.
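One common way to get this behavior is an idempotency key supplied by the caller. The sketch below assumes a hypothetical in-memory `PaymentService`; a real system would persist the seen keys, but the contract is the same: a repeated key returns the stored result instead of applying the effect again.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentService:
    """Hypothetical service that deduplicates requests by idempotency key."""
    balance: int = 0
    _seen: dict[str, dict] = field(default_factory=dict)

    def credit(self, idempotency_key: str, amount: int) -> dict:
        # A repeated key returns the stored result without reapplying the effect.
        if idempotency_key in self._seen:
            return self._seen[idempotency_key]
        self.balance += amount
        result = {"status": "applied", "balance": self.balance}
        self._seen[idempotency_key] = result
        return result

    def get_state(self) -> dict:
        # Queryable state lets an agent check where things stand before acting.
        return {"balance": self.balance, "processed": len(self._seen)}


service = PaymentService()
first = service.credit("refund-1001", 50)
retry = service.credit("refund-1001", 50)  # e.g. the agent retried after a timeout
```

Here `retry` is the same result as `first`, and the balance has only been credited once.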

2. Observable Execution and Decision Tracing

Agents need more than success/failure flags. They need introspection. What decision was made, based on which input, and what changed as a result? Agentic platforms must provide traceable execution logs, event snapshots, and decision metadata. This not only enables smarter agent behavior over time but also gives human overseers the transparency they need to audit, debug, or intervene when required.
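As an illustration of what "decision metadata" might look like, the following sketch records each decision with its inputs, the rule that produced it, and the resulting changes. The field names and the `ExecutionTrace` class are assumptions made for this example, not a description of any specific product's log format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Optional


@dataclass
class TraceEntry:
    step: str
    inputs: dict[str, Any]
    decision: str
    rule: str                 # which rule produced the decision
    changes: dict[str, Any]   # what changed as a result


@dataclass
class ExecutionTrace:
    flow_id: str
    entries: list[TraceEntry] = field(default_factory=list)

    def record(self, step: str, inputs: dict, decision: str, rule: str,
               changes: Optional[dict] = None) -> None:
        self.entries.append(TraceEntry(step, inputs, decision, rule, changes or {}))

    def to_json(self) -> str:
        # Serialized form that both agents and human auditors can inspect.
        return json.dumps(asdict(self), sort_keys=True)


trace = ExecutionTrace("return-flow-42")
trace.record(
    step="check_eligibility",
    inputs={"days_since_delivery": 10},
    decision="approve",
    rule="within_return_window",
    changes={"refund_status": "approved"},
)
```

A record like this answers the three questions above directly: what was decided, on which input, and what changed.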

3. Composable Orchestration Logic

Complex agents don’t execute single API calls; they orchestrate tasks, conditionals, fallbacks, and interactions across multiple systems. That orchestration must be composable: built from modular, reusable logic units that can be chained, nested, or overridden without reinventing the wheel each time. Rierino’s saga flow tooling enables this out of the box, making long-running, conditional, and compensating workflows straightforward to design and govern.
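The idea of chaining and nesting reusable logic units can be sketched in a few lines of plain Python. This is a generic illustration of composition, not Rierino's saga tooling; `chain`, `with_fallback`, and the step functions are hypothetical names.

```python
from typing import Callable

Context = dict
Step = Callable[[Context], Context]


def chain(*steps: Step) -> Step:
    """Compose steps so the output context of one feeds the next."""
    def run(ctx: Context) -> Context:
        for step in steps:
            ctx = step(ctx)
        return ctx
    return run


def with_fallback(primary: Step, fallback: Step) -> Step:
    """Wrap a step so a failure routes to a fallback instead of aborting."""
    def run(ctx: Context) -> Context:
        try:
            return primary(dict(ctx))  # copy so a failed step leaves ctx untouched
        except Exception as exc:
            return fallback({**ctx, "fallback_reason": str(exc)})
    return run


def set_price(ctx: Context) -> Context:
    return {**ctx, "price": 19.99}


def upload_assets(ctx: Context) -> Context:
    if not ctx.get("assets"):
        raise ValueError("no assets provided")
    return {**ctx, "assets_uploaded": True}


def flag_for_review(ctx: Context) -> Context:
    return {**ctx, "needs_review": True}


# Composed units are themselves steps, so they can be nested freely.
launch = chain(set_price, with_fallback(upload_assets, flag_for_review))
```

Because `chain` and `with_fallback` both return a `Step`, a composed flow can be reused as a building block inside a larger one.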

4. Contextual Inputs and Bounded Decision-Making

Agents act based on the context they are given: current state, system responses, user preferences, and historical data. Too little context, and they underperform. Too much, and they become brittle or unpredictable. Agentic platforms must support bounded context delivery, with inputs scoped and filtered based on the decision being made. This ensures agents operate efficiently, ethically, and within guardrails.

5. Stability and Backward Compatibility

Agentic execution assumes long-term consistency. Interfaces, behavior, and response contracts must be stable over time, even as underlying systems evolve. While agents are becoming more adaptable, they still depend on consistent interfaces and predictable behavior to operate reliably over time. This makes versioning, compatibility layers, and dependency tracking critical to sustainable agent development.

These principles aren’t theoretical; they’re architectural commitments. They form the foundation of a scalable AI agent development environment that supports resilient logic orchestration and governed autonomy. At Rierino, they’re reflected in how we’ve built our orchestration engine and AI Agent Builder, which exposes system logic, manages state, and enables execution flows that are both powerful and governable.

This is what sets an agentic platform apart: not just supporting AI agents, but designing the system around them from the start.

Agent Execution Through Contextual Decision-Making

Decision-making is at the heart of any agent’s behavior. Whether it’s fulfilling an order, adjusting product configurations, processing returns, or coordinating between inventory and customer service systems, AI agents don’t just call endpoints; they evaluate, choose, and act based on context.

But in enterprise environments, context is rarely static. It shifts with user inputs, system states, business rules, and time-based conditions. That’s why an agentic platform must support dynamic, contextual execution, empowering agents to reason over complex inputs, react to events, and take appropriate action at every step. We explored how this comes to life in practice in Introducing Rierino AI Agent Builder, where execution logic, orchestration, and decision context are all unified in a developer-friendly environment.

Here are the key capabilities that enable contextual execution within agent orchestration:

Stateful Workflows

Stateless APIs are fine for one-off tasks. But for processes like onboarding a vendor, assembling a custom product bundle, or managing a B2B procurement cycle, agents need to track progress across multiple steps and sessions.

For example, an AI agent managing a new marketplace seller onboarding flow might:

- Validate tax IDs in step one

- Await external verification responses for KYC in step two

- Resume once verification completes, even hours later, and proceed to listing product SKUs

Rierino enables this kind of persistent, state-aware orchestration natively, allowing agents to pause, resume, or defer actions without losing execution context.
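The onboarding steps above can be sketched as a small resumable state machine. This is a simplified illustration under stated assumptions: the step names, the `kyc_verified` event, and the JSON round trip standing in for durable storage are all hypothetical, and real orchestration engines handle persistence and event delivery for you.

```python
import json
from typing import Optional

STEPS = ["validate_tax_id", "await_kyc", "list_skus"]


def new_flow() -> dict:
    return {"index": 0, "status": "running", "completed": []}


def advance(state: dict, event: Optional[str] = None) -> dict:
    """Run the flow as far as it can go and return the new state."""
    state = dict(state)
    while state["status"] != "done":
        step = STEPS[state["index"]]
        if step == "await_kyc" and event != "kyc_verified":
            # Pause here; the caller persists the state and returns later.
            state["status"] = "waiting"
            return state
        state["completed"] = state["completed"] + [step]
        state["index"] += 1
        state["status"] = "done" if state["index"] == len(STEPS) else "running"
    return state


# First run stops at the external verification step.
paused = advance(new_flow())
stored = json.dumps(paused)  # stand-in for writing to a database

# Hours later, the verification event arrives and the flow resumes.
finished = advance(json.loads(stored), event="kyc_verified")
```

The key property is that everything needed to resume lives in the serialized state, so the agent does not have to keep a session open while it waits.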

Context Propagation

Agents don’t need all data; they need the right data at the right time. Imagine an agent assisting a customer in a return flow. It needs to know:

- The order status (delivered or in-transit?)

- Return eligibility rules (based on item category and days since delivery)

- Inventory disposition (restockable or not?)

By propagating only this scoped context, the agent can make an informed decision on whether to approve an instant refund, trigger a manual review, or suggest store credit. Rierino’s flow engine manages this context propagation securely and predictably across each orchestration step.
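A minimal sketch of scoped context for the return decision might look like this. The field names, the return windows in `RETURN_WINDOW_DAYS`, and the decision rules are hypothetical policy values used only to make the example runnable.

```python
RETURN_CONTEXT_FIELDS = frozenset(
    {"order_status", "item_category", "days_since_delivery", "restockable"}
)

# Hypothetical return windows per item category, in days.
RETURN_WINDOW_DAYS = {"apparel": 30, "electronics": 14}


def scope_context(full_context: dict, allowed_fields: frozenset) -> dict:
    """Pass along only the fields this decision is allowed to see."""
    return {k: v for k, v in full_context.items() if k in allowed_fields}


def decide_return(ctx: dict) -> str:
    if ctx["order_status"] != "delivered":
        return "manual_review"
    window = RETURN_WINDOW_DAYS.get(ctx["item_category"], 0)
    if ctx["days_since_delivery"] > window:
        return "store_credit"
    return "instant_refund" if ctx["restockable"] else "manual_review"


full = {
    "order_status": "delivered",
    "item_category": "apparel",
    "days_since_delivery": 10,
    "restockable": True,
    "customer_email": "jane@example.com",  # not needed for this decision
    "payment_token": "tok_123",            # should never reach the agent
}
decision = decide_return(scope_context(full, RETURN_CONTEXT_FIELDS))
```

Filtering with an explicit allow-list keeps sensitive or irrelevant fields out of the agent's input by default, rather than relying on the agent to ignore them.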

Conditional Logic and Branching

Enterprise logic is rarely linear. For example, a fulfillment agent may:

- Route an order to the nearest warehouse if stock is available

- Otherwise trigger a backorder with estimated delivery calculation

- Or escalate to a customer support agent if no stock is expected for more than 7 days

This type of branching can’t be hardcoded or buried in brittle decision trees. Rierino provides low-code tools for building conditional paths directly into orchestration flows so that agents can adapt in real time based on the evolving state.
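Expressed as code, the three fulfillment branches above could be sketched as follows. The function signature, the distance-based "nearest" rule, and the `MAX_BACKORDER_DAYS` constant are assumptions for illustration; in a low-code flow these would be configurable conditions rather than a hand-written function.

```python
from typing import Optional

MAX_BACKORDER_DAYS = 7  # threshold taken from the example above


def route_order(
    qty: int,
    stock: dict[str, int],
    distance_km: dict[str, float],
    restock_eta_days: Optional[int],
) -> dict:
    # Branch 1: ship from the nearest warehouse that can cover the order.
    in_stock = [w for w, units in stock.items() if units >= qty]
    if in_stock:
        nearest = min(in_stock, key=lambda w: distance_km[w])
        return {"action": "ship", "warehouse": nearest}
    # Branch 2: backorder when restock is expected within the threshold.
    if restock_eta_days is not None and restock_eta_days <= MAX_BACKORDER_DAYS:
        return {"action": "backorder", "eta_days": restock_eta_days}
    # Branch 3: no stock expected soon enough, hand off to support.
    return {"action": "escalate", "to": "customer_support"}
```

Each branch returns a structured action, so the next orchestration step can dispatch on `action` without interpreting text.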

Retry, Compensation, and Timeout Handling

Even the best systems fail occasionally. APIs time out, third-party services drop connections, or internal processes take too long.

Let’s say an agent attempts to process a refund through a payment gateway. If the API call fails:

- It should retry with exponential backoff

- If the failure persists, it might log the error, alert an operations agent, and trigger a fallback refund method (e.g. store credit)

- If compensation is required (e.g. reversing an inventory adjustment), that action should be triggered reliably

Rierino’s orchestration engine handles retries, fallbacks, and compensating logic natively, ensuring that agents act responsibly even when systems don’t behave perfectly.
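For readers who want to see the mechanics, the refund scenario above can be sketched in plain Python. This is a hand-rolled illustration, not the orchestration engine's implementation: `retry_with_backoff`, `process_refund`, and the callables passed into them are hypothetical, and the sketch assumes transient gateway failures surface as `ConnectionError`.

```python
import time
from typing import Callable


def retry_with_backoff(action: Callable[[], str], attempts: int = 3,
                       base_delay: float = 0.5,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Retry a transiently failing call, doubling the delay each time."""
    for attempt in range(attempts):
        try:
            return action()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts, let the caller decide what to do
            sleep(base_delay * 2 ** attempt)


def process_refund(gateway_refund: Callable[[], str],
                   store_credit: Callable[[], str],
                   reverse_inventory: Callable[[], None],
                   sleep: Callable[[float], None] = time.sleep) -> dict:
    try:
        result = retry_with_backoff(gateway_refund, sleep=sleep)
        return {"method": "gateway", "result": result}
    except ConnectionError as exc:
        gateway_error = str(exc)  # would also be logged and alerted on
    try:
        # Fallback refund method once gateway retries are exhausted.
        return {"method": "store_credit", "result": store_credit(),
                "gateway_error": gateway_error}
    except Exception:
        # Neither path worked: compensate the earlier inventory adjustment.
        reverse_inventory()
        raise
```

The `sleep` parameter is injected so the backoff schedule can be tested without real delays. Production systems often add random jitter to the delay as well, which this sketch omits.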

Bounded, Dynamic Decision Context

Agents act based on the context they are given, but that context must be bounded and purposeful. Too little, and decisions are underinformed. Too much, and the agent becomes brittle, confused, or inefficient.

A customer service agent, for example, should receive:

- The customer’s most recent support tickets

- Any escalations tied to the current order

- SLA deadlines for resolution based on the customer tier

Not the entire order history, full product catalog, or irrelevant ticket threads. This is where agent orchestration becomes more than just a sequence of API calls. It becomes a dynamic reasoning layer where agents can make trade-offs, adjust to evolving inputs, and operate with autonomy without sacrificing business logic or governance.

This execution-first approach to AI moves beyond copilots and into full-scale automation, where systems are designed around action, not just assistance. At Rierino, contextual orchestration is not an afterthought. It’s a core design principle — one that allows agents to operate with precision, resilience, and purpose in real-world enterprise environments.

Orchestrating Multi-Agent Systems: Beyond a Single AI

As AI agents take on more responsibilities in enterprise workflows, the need for coordination across multiple agents becomes increasingly relevant. Single-agent execution can handle isolated tasks. But real-world processes often require multiple specialized agents working together, each contributing to a larger goal while maintaining its own scope and logic.

This is where agentic platforms begin to move beyond automation and into true collaborative orchestration.

Role-Based Agent Delegation

Just as human teams have specialized roles, agents can be designed to take on distinct functions:

- A planner agent breaks down high-level goals into executable steps.

- An executor agent handles transactional tasks like creating records or processing payments.

- A verifier agent ensures outcomes meet required business rules before finalizing.

- A communicator agent might interface with external APIs or generate summaries for humans.

Take a product launch process in a commerce platform:

- The planner agent defines tasks like pricing, asset generation, and inventory mapping.

- Executor agents handle PIM updates, image uploads, and channel configuration.

- A verifier agent checks launch-readiness criteria.

- If any issues are found, the planner is notified to reassign or adjust.

Each agent has a bounded scope, and their combined flow is orchestrated through a central logic layer that defines who acts, when, and under what conditions.
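The product launch example can be sketched as a small central loop that calls each role in turn. The agents here are plain functions standing in for real planner, executor, and verifier agents, and the task names and `max_rounds` limit are hypothetical.

```python
from typing import Callable

REQUIRED_TASKS = ("pricing", "asset_generation", "inventory_mapping")


def plan(goal: str) -> list[str]:
    """Planner: break the goal into executable tasks."""
    return list(REQUIRED_TASKS)


def verify(done: set[str]) -> list[str]:
    """Verifier: report which launch-readiness criteria are still unmet."""
    return [task for task in REQUIRED_TASKS if task not in done]


def run_launch(execute: Callable[[str], bool], max_rounds: int = 2) -> dict:
    """Central logic layer: decides who acts, when, and under what conditions."""
    tasks = plan("launch product")
    done: set[str] = set()
    missing = tasks
    for round_no in range(1, max_rounds + 1):
        for task in tasks:
            if execute(task):  # executor reports success or failure
                done.add(task)
        missing = verify(done)
        if not missing:
            return {"status": "ready", "rounds": round_no}
        tasks = missing  # planner reassigns only what failed verification
    return {"status": "needs_human", "missing": missing}
```

Each role only sees its own inputs, while the loop in `run_launch` owns sequencing and the stop condition, including the point at which a human is brought in.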

Choreography vs. Centralized Orchestration

Agent collaboration can follow two main models:

- Orchestration, where an AI Gateway manages the flow, assigns tasks, and monitors outcomes (ideal for structured enterprise logic).

- Choreography, where agents operate more independently and signal each other via events or shared state (suited to loosely coupled domains).

Rierino supports both, allowing developers to design orchestrated flows with full control while enabling asynchronous event-based triggers where needed. For example:

- In a returns process, a verifier agent may reject an auto-refund, triggering an escalation to a human.

- In a personalization flow, a communicator agent may subscribe to events from a user behavior tracker and adjust its recommendations accordingly.

This flexibility enables real-world orchestration strategies without forcing brittle, monolithic logic.
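To illustrate the choreography model, here is a minimal in-process publish/subscribe sketch based on the returns example. The `EventBus` class, topic names, and refund limit are assumptions for this example; a production system would typically use a message broker or event stream instead.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Tiny in-memory pub/sub used to show agents signaling via events."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        for handler in list(self._handlers[topic]):
            handler(payload)


def make_verifier(bus: EventBus, auto_refund_limit: float) -> Callable[[dict], None]:
    """Verifier agent: approves small refunds, rejects the rest."""
    def on_refund_requested(event: dict) -> None:
        approved = event["amount"] <= auto_refund_limit
        bus.publish("refund.approved" if approved else "refund.rejected", event)
    return on_refund_requested


bus = EventBus()
human_queue: list[dict] = []
auto_refunds: list[dict] = []

bus.subscribe("refund.requested", make_verifier(bus, auto_refund_limit=100.0))
bus.subscribe("refund.rejected", human_queue.append)   # escalation to a human
bus.subscribe("refund.approved", auto_refunds.append)

bus.publish("refund.requested", {"order_id": "A-1", "amount": 40.0})
bus.publish("refund.requested", {"order_id": "A-2", "amount": 950.0})
```

No component calls another directly: the verifier only knows the topics it publishes to, which is what keeps the agents loosely coupled.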

Inter-Agent Governance and Control

Coordination doesn’t mean chaos. Multi-agent systems introduce new governance challenges:

- What happens if two agents issue conflicting updates?

- Who resolves deadlocks or handles conflicting decisions?

- How do you log, monitor, and trace multi-agent behavior at scale?

Rierino addresses this through governance-first orchestration. Every agent interaction is observable, logged, and replayable. You can define escalation paths, priority rules, and compensation logic across agent boundaries, ensuring that the system stays deterministic and trustworthy even under complex interactions.
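One widely used answer to the conflicting-updates question is optimistic concurrency: each write states the version it was based on, and stale writes are rejected and logged. The sketch below is a generic illustration of that technique with hypothetical names; it is not a description of how any specific platform resolves conflicts.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedRecord:
    value: dict
    version: int = 0
    log: list[tuple[str, str, int]] = field(default_factory=list)

    def update(self, agent: str, new_value: dict, expected_version: int) -> bool:
        # Reject writes based on a stale read instead of silently overwriting.
        if expected_version != self.version:
            self.log.append((agent, "conflict", self.version))
            return False
        self.value = new_value
        self.version += 1
        self.log.append((agent, "applied", self.version))
        return True


price = VersionedRecord({"price": 20.0})
seen_by_both = price.version  # both agents read version 0

price.update("pricing_agent", {"price": 18.0}, seen_by_both)
price.update("promo_agent", {"price": 15.0}, seen_by_both)  # stale, rejected
```

The rejected write is recorded in `log`, so the conflict is observable and the losing agent can re-read the current state and decide whether to retry or escalate.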

Agentic platforms must make it easy to build collaborative logic without losing control. That means giving developers the power to design how agents interact but also the observability, safety nets, and override mechanisms needed to keep those interactions productive and aligned.

Multi-agent orchestration isn’t about increasing complexity for its own sake. It’s about enabling scalable, domain-specific autonomy while staying accountable to business rules, system design, and human oversight.

Human-AI Handoff: Building Systems for Shared Control

As AI agents become more capable, one of the most important design considerations is knowing when and how to bring humans into the loop. Full autonomy may be the goal in some cases, but in most enterprise workflows, shared control is both necessary and desirable.

A well-designed agentic platform should make it seamless to alternate between automated execution and human intervention without breaking flow, losing state, or compromising governance.

Interruptible Flows and Escalation Paths

Agents should be able to detect when human input is required and trigger a pause, reroute, or escalation as needed. For example:

- In a refund process, an agent may auto-approve based on policy but escalate to a human if the transaction exceeds a risk threshold.

- In a product enrichment flow, an agent might generate placeholder descriptions and flag any low-confidence fields for human review before publishing.

These moments shouldn’t require ad hoc overrides. They should be built into the orchestration layer as configurable conditions with well-defined transitions.
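A configurable escalation condition with explicit transitions could be sketched as follows. The `EscalationPolicy` fields, the state names, and the threshold values are hypothetical; the point is that the handoff is a defined transition in the flow rather than an ad hoc override.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EscalationPolicy:
    """Configuration, not code: thresholds can change without redeploying logic."""
    max_auto_amount: float
    max_risk_score: float


def next_state(refund: dict, policy: EscalationPolicy) -> str:
    if (refund["amount"] > policy.max_auto_amount
            or refund["risk_score"] > policy.max_risk_score):
        return "awaiting_human"  # the flow pauses here with state intact
    return "auto_approved"


def resolve(state: str, human_decision: str) -> str:
    """Well-defined transition out of the paused state."""
    if state != "awaiting_human":
        raise ValueError(f"cannot resolve a flow in state {state!r}")
    return "approved" if human_decision == "approve" else "rejected"


policy = EscalationPolicy(max_auto_amount=200.0, max_risk_score=0.7)
state = next_state({"amount": 850.0, "risk_score": 0.2}, policy)
```

In this example `state` is `"awaiting_human"`, and only `resolve` can move the flow forward from there.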

Explainability and Traceability

When agents act on behalf of users, or in place of them, their actions must be explainable. That includes:

- Why a certain decision was made

- What input data or rules were used

- What alternatives were considered (if any)

- How the outcome aligned with expected business logic

Rierino captures this as part of its execution trace and orchestration history. Every decision point can be reviewed, audited, and used to improve future logic or to clarify agent behavior when things don’t go as planned.

Human Feedback as a First-Class Signal

Humans shouldn’t just override agents; they should help train and evolve them. Agentic platforms should treat human input not as an interruption, but as an opportunity to improve. Examples include:

- Overriding an incorrect classification or decision, with the reason captured for future tuning

- Providing corrections or annotations in multi-turn interactions

- Setting new thresholds or parameters based on context the agent couldn’t infer

This feedback loop is essential not only for trust, but for long-term adaptability. Rierino allows you to integrate feedback into decision logic, influence branching conditions, or prompt retraining processes — all without breaking the orchestration model.

User Interfaces That Complement Agents, Not Compete with Them

When humans and agents share responsibility, the interface must reflect that partnership. That means:

- Surfacing agent-suggested actions in human-facing UIs

- Showing execution progress and letting users step in mid-flow

- Offering approval, rejection, or reconfiguration options that directly influence the orchestration

In a product launch scenario, for instance, a human product manager might be presented with a generated launch checklist, auto-filled data from PIM, and agent-suggested channel recommendations, but still have the final say before triggering go-live.

In an agentic platform, humans and AI aren’t competing for control. They’re collaborators, each with different strengths, working toward a shared outcome. Systems that embrace this shared control model can deliver the best of both worlds: automation at scale, with accountability where it matters.

Designing Systems for AI as the Next User

The emergence of AI agents as users marks a turning point in how enterprise systems are architected and defines the blueprint for any modern AI agent development platform. It’s not just about adding AI to existing workflows — it’s about designing systems that can support AI as an active participant in logic, execution, and decision-making.

This shift calls for a new kind of architecture: one where logic is orchestrated rather than hardcoded, where state and context are treated as first-class citizens, and where both humans and agents can operate collaboratively.

Agentic platforms embrace this model by enabling:

- Composable orchestration that supports multi-step, stateful execution

- Dynamic decision-making based on real-time context

- Multi-agent coordination across specialized roles

- Shared control between humans and AI, with explainability and traceability built in

Here are a few guiding questions to start evaluating if your systems are agent-ready:

- Can your backend logic be exposed through structured, reliable interfaces or tooling?

- Is your execution flow decoupled from UI?

- Do your systems support retries, compensations, and context-driven branching?

- Can decisions be tracked, explained, and overridden where necessary?

- Are human-AI handoffs part of the design, or afterthoughts?

Building for AI as a user doesn’t mean rewriting everything. It means rethinking how your systems are structured and preparing them to work with users who don’t read screens or click buttons, but reason, plan, and act. With the right orchestration foundation, AI agents don’t just automate tasks; they become partners in executing complex, evolving business logic. And that’s where the real transformation begins.

Ready to accelerate your AI agent development? Get in touch with our experts today to discover how Rierino can help you build intelligent solutions tailored to your business needs.
