Generative AI has moved well past the exploration stage; as McKinsey puts it, "the honeymoon phase is over". For technology leaders, the conversation is no longer about whether AI will impact the business; it’s about how to scale it effectively and sustainably.

In that context, the early excitement of prototypes and pilot use cases is giving way to more complex realities: integration challenges, rising costs, governance pressures, and the lack of a repeatable foundation.

What’s becoming clear is that scaling Gen AI isn’t just a matter of building more use cases. It requires a fundamental shift: from fragmented experiments to cohesive execution, and from tool-first thinking to platform thinking.

This article explores how enterprise AI initiatives move beyond the pilot trap by focusing on architecture, orchestration, and scale-ready design. Specifically, it will cover:

- What scaling Gen AI really means beyond volume and model access

- Why many initiatives stall before reaching production value

- The core technical capabilities required for orchestration at scale

- Organizational enablers like governance, talent alignment, and process structure

- How execution-first platforms like Rierino support sustainable, system-wide Gen AI adoption

What Does Scaling Gen AI Actually Mean for Enterprises?

Scaling generative AI is often misinterpreted as simply deploying more models or expanding access to prompt interfaces. But true scale requires more than reach; it requires integration. It’s about how deeply AI capabilities are embedded into the organization’s operational backbone.

That means scaling across multiple dimensions:

- Use cases: from marketing and service to finance, supply chain, and IT

- Users: including developers, business users, and autonomous AI agents

- Channels: spanning APIs, chat interfaces, backend workflows, and data streams

- Contexts: across geographies, customer types, compliance zones, and business scenarios

When Gen AI scales effectively, it delivers more than just output:

- Accelerated time-to-market, by reusing core logic and orchestration patterns

- Lower marginal costs per use case, thanks to a shared platform and modular architecture

- Consistent governance, as logic, permissions, and monitoring are applied system-wide

Crucially, AI at scale becomes part of the execution fabric.

It no longer sits on the periphery generating content. Instead, it actively participates in workflows, makes decisions based on live context, and coordinates with other systems and agents.

This perspective also shapes how low-code development is evolving. As we outlined in our article on low-code use cases for CTOs, the focus is shifting from building user interfaces to orchestrating backend logic, automating distributed decisions, and enabling collaboration between human and AI actors.

From this standpoint, platforms that support AI not just as an add-on, but as an orchestrated execution layer, are better positioned to help enterprises move beyond pilots toward real, repeatable outcomes.

Why Enterprise Gen AI Pilots Fail to Scale

There’s no shortage of enthusiasm around generative AI, but scaling remains elusive for many enterprises. While experimentation is widespread, the leap from pilot to production often stalls, mainly because of structural constraints.

According to recent research:

- Fewer than 30% of Gen AI experiments have been moved into production

- 41% of enterprises struggle to define or measure their impact

- And over 50% are avoiding certain use cases altogether due to data-related limitations

These findings reflect what many technology leaders already sense: Gen AI isn’t failing because of a lack of interest. It’s failing because the architecture surrounding it hasn’t kept up. At the core of this problem are four recurring blockers:

1. Disconnected Systems Block Real-Time Execution

Most Gen AI experiments start in isolation, typically on disconnected infrastructure such as sandboxes spun up for a single use case or tied to one-off data snapshots. That’s a valid way to test feasibility, but without seamless integration into core systems, it quickly becomes a liability when moving toward production.

We’ve seen content generation tools built without access to up-to-date product catalogs or customer profiles, leading to inaccurate or redundant output. In internal automation pilots, agents are often designed to interact with a service layer that doesn’t reflect real-world latency, error handling, or security rules, breaking when integrated with live systems. In support functions, LLMs might summarize knowledge base articles well in test environments but fail when connected to siloed ticketing data or stale customer histories.

Without real-time, bi-directional access to core systems, AI remains disconnected from the very workflows it’s meant to augment.

2. The Absence of Orchestration Leads to Inconsistent Behavior

In many organizations, different teams pursue Gen AI independently, often with entirely different assumptions about how agents should behave. One team may build prompt-based flows in a chatbot, while another schedules offline AI jobs, and a third uses RPA scripts to trigger AI tasks. Without a shared orchestration layer, these agents can’t coordinate or escalate across domains.

For example, a finance team might use an AI agent to flag unusual expenses based on a certain cost threshold, while the procurement team builds a separate tool to validate vendor invoices using different rules. Since these tools aren’t orchestrated together, the same invoice could be flagged by one team and approved by another, with no shared logic to resolve the discrepancy or explain the outcome. Similarly, an AI-generated recommendation in a customer portal may not align with what a service agent sees in the back office, because each uses a different context model.

These discrepancies create confusion, redundant logic, and a lack of trust among both users and internal stakeholders, especially when agents appear to operate with different rules across systems.
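The invoice example points to a simple remedy: define the decision rule once and have every agent call it. The sketch below assumes Python 3.9+; `Invoice`, `review_invoice`, the approved-vendor list, and the threshold value are hypothetical placeholders, not part of any particular platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    invoice_id: str
    vendor: str
    amount: float


@dataclass(frozen=True)
class Decision:
    flagged: bool
    reason: str


# Hypothetical policy values, owned in one place instead of per team.
APPROVED_VENDORS = {"acme", "globex"}
COST_THRESHOLD = 10_000.0


def review_invoice(invoice: Invoice) -> Decision:
    """Shared rule: the single source of truth for flagging an invoice."""
    if invoice.vendor not in APPROVED_VENDORS:
        return Decision(True, f"vendor '{invoice.vendor}' is not approved")
    if invoice.amount > COST_THRESHOLD:
        return Decision(True, f"amount {invoice.amount:,.2f} exceeds {COST_THRESHOLD:,.2f}")
    return Decision(False, "within policy")


def finance_agent(invoice: Invoice) -> Decision:
    # Team-specific steps (alerts, reporting) can wrap the shared rule,
    # but the decision itself is never re-implemented.
    return review_invoice(invoice)


def procurement_agent(invoice: Invoice) -> Decision:
    return review_invoice(invoice)


if __name__ == "__main__":
    inv = Invoice("INV-001", "acme", 12_500.0)
    print(finance_agent(inv))
    print(procurement_agent(inv))
```

Because both agents delegate to the same rule, both teams see the same outcome, and the same explanation, for any given invoice.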

3. Custom Integration Work Creates a Scalability Bottleneck

When every new use case requires its own integration layer, connector logic, and exception handling, it becomes clear that the pilot wasn’t built with reuse in mind. We’ve seen teams build custom pipelines just to extract customer interaction data for summarization, or hardcode workflows that only work with a single language or country-specific data format.

Scaling that kind of setup is painful. A marketing automation team may struggle to localize AI-driven campaign tools across regions because the underlying data sources, formatting, and fallback logic are hardwired. A customer operations pilot that works with one CRM instance often requires weeks of rework to function with another business unit’s setup, simply because there was no abstraction or orchestration strategy in place.
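The missing abstraction can be as small as an adapter interface that normalizes each CRM's records before the AI use case sees them. In this sketch, `CrmAdapter`, `CustomerRecord`, and both CRM classes are hypothetical; real connectors would call each system's own API instead of reading from in-memory dictionaries.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class CustomerRecord:
    customer_id: str
    name: str
    country: str


class CrmAdapter(Protocol):
    def fetch_customer(self, customer_id: str) -> CustomerRecord: ...


class UnitACrm:
    """Hypothetical CRM storing a single full-name field."""

    def __init__(self, rows: dict[str, dict]):
        self.rows = rows

    def fetch_customer(self, customer_id: str) -> CustomerRecord:
        row = self.rows[customer_id]
        return CustomerRecord(customer_id, row["full_name"], row["country_code"])


class UnitBCrm:
    """Hypothetical CRM with split name fields and a different country key."""

    def __init__(self, rows: dict[str, dict]):
        self.rows = rows

    def fetch_customer(self, customer_id: str) -> CustomerRecord:
        row = self.rows[customer_id]
        return CustomerRecord(customer_id, f"{row['first']} {row['last']}", row["iso_country"])


def build_summary_prompt(crm: CrmAdapter, customer_id: str) -> str:
    # The use case depends only on the normalized record, so supporting another
    # business unit means writing one adapter, not reworking the flow.
    record = crm.fetch_customer(customer_id)
    return f"Summarize recent interactions for {record.name} ({record.country})."


if __name__ == "__main__":
    a = UnitACrm({"42": {"full_name": "Ada Lovelace", "country_code": "GB"}})
    b = UnitBCrm({"42": {"first": "Ada", "last": "Lovelace", "iso_country": "GB"}})
    print(build_summary_prompt(a, "42"))
    print(build_summary_prompt(b, "42"))
```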

The result is a growing portfolio of AI solutions that are brittle, expensive to maintain, and almost impossible to scale efficiently.

4. Lack of Governance Undermines Trust and Control

In the rush to prove value, governance is often deferred, only to become a blocker later. We’ve seen pilots run without basic access control or audit trails, where it’s unclear who modified a prompt or why an agent made a particular decision. Once legal, risk, or compliance teams are brought in, everything slows or halts altogether.

This becomes particularly problematic in regulated industries. In financial services, for instance, we’ve seen agents tasked with summarizing account activity or automating client onboarding fail governance reviews because they couldn’t log decision steps or verify data sources. In healthcare, even a well-functioning clinical note summarizer can be sidelined if it can’t demonstrate that sensitive fields are masked or stored correctly.

Even outside high-risk domains, trust erodes quickly if users can’t understand how outputs are generated or updated, especially when models or prompts evolve without documentation.

These challenges often remain hidden during early experimentation. But as use cases grow, their cumulative impact becomes clear: scaling without structure leads to fragility. Retrofitting governance or orchestration after the fact is not only complex; it often requires starting over.

Building the Right Technology Foundation for Scaling Gen AI

To scale generative AI successfully, enterprises need more than model access or prompt engineering expertise. They need the architectural foundations to orchestrate logic, connect systems, and operationalize agent behavior securely, consistently, and at scale.

At the center of this foundation is a shift in thinking: from “experiment and deploy” to “design and orchestrate”. In practice, that means building systems that treat Gen AI as part of the execution layer, and not just a front-end enhancement.

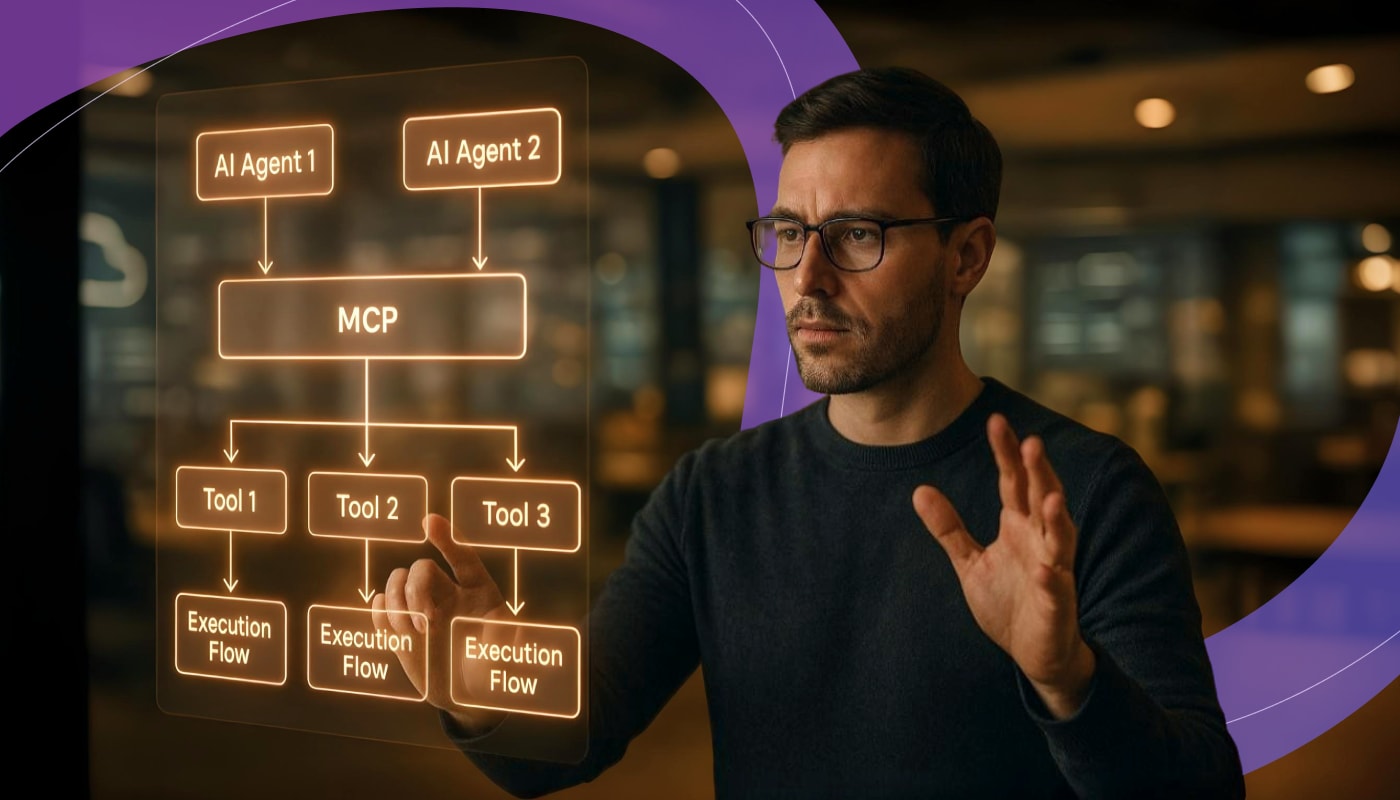

1. Central Execution and Orchestration Layer

Scaling requires a unified layer where business logic, system actions, and AI reasoning converge. It’s not enough to invoke LLMs at the edge. Enterprises need a way to coordinate actions across agents, users, and backend systems with clear control over state, sequence, and outcomes.

Whether the goal is automating product onboarding or enabling an agent to respond to a customer request in real time, orchestration ensures that the right services, prompts, and decisions happen in the right order. This is the difference between an AI that can generate text and one that can execute a process.
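To make "the right order" concrete, here is a minimal sketch of a sequential orchestrator that passes shared state between steps and records which steps completed. It assumes Python 3.9+; `run_flow` and the product-onboarding steps are hypothetical, and `generate_description` is a stub standing in for an LLM call. Production orchestration would also need persistence, retries, and compensation, which this sketch omits.

```python
from typing import Any, Callable

State = dict[str, Any]
Step = tuple[str, Callable[[State], State]]


def run_flow(steps: list[Step], initial: State) -> State:
    """Run steps in order, tracking progress and stopping at the first failure."""
    state: State = {**initial, "completed": [], "error": None}
    for name, step in steps:
        try:
            state = step(state)
        except Exception as exc:
            # Keep the last good state and record where the flow stopped.
            state["error"] = f"{name}: {exc}"
            break
        state["completed"].append(name)
    return state


def validate_product(state: State) -> State:
    if not state.get("sku"):
        raise ValueError("missing SKU")
    return state


def generate_description(state: State) -> State:
    # Stub for a model call; a real flow would invoke an LLM here.
    return {**state, "description": f"{state['title']}: now available in our catalog."}


def publish(state: State) -> State:
    return {**state, "published": True}


ONBOARDING: list[Step] = [
    ("validate", validate_product),
    ("generate", generate_description),
    ("publish", publish),
]

if __name__ == "__main__":
    print(run_flow(ONBOARDING, {"sku": "SKU-1", "title": "Trail Shoe"}))
    print(run_flow(ONBOARDING, {"title": "Untitled"}))
```

The second run stops at validation, so nothing is generated or published, and the returned state says exactly where the flow stopped.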

2. Coordinated Agent Logic with Fallback Paths and Decision Trees

As AI agents take on more responsibility, it’s essential that they behave predictably even when the model response is unclear or incomplete. This means defining fallback logic, branching paths, escalation points, and guardrails as part of the agent’s orchestration, rather than burying them inside a prompt.

We’re already seeing teams structure agents with role-based flows: an inventory agent that checks multiple systems before escalating to a human, or a policy analyzer that shifts behavior based on regulatory zone. These are no longer “prompt flows”; they’re multi-step systems that must be modeled and governed.
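The inventory agent described above can be sketched as explicit control flow: try each source in turn, fall back when one times out or returns nothing usable, and escalate to a human when every path is exhausted. The source names, `check_inventory`, and the simulated lookups are hypothetical; the point is that fallback and escalation live in code the platform can inspect and govern, not in a prompt.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Lookup = Callable[[str], Optional[int]]


@dataclass(frozen=True)
class Outcome:
    status: str  # "answered" or "escalated"
    source: str  # system that answered, or "human_review"
    quantity: Optional[int] = None


def check_inventory(sku: str, sources: list[tuple[str, Lookup]]) -> Outcome:
    """Query sources in priority order; escalate if none returns a usable answer."""
    for name, lookup in sources:
        try:
            quantity = lookup(sku)
        except TimeoutError:
            continue  # fallback path: try the next system
        if quantity is not None and quantity >= 0:
            return Outcome("answered", name, quantity)
    return Outcome("escalated", "human_review")


def warehouse_api(sku: str) -> Optional[int]:
    raise TimeoutError("warehouse API did not respond")  # simulated outage


def erp_lookup(sku: str) -> Optional[int]:
    return {"SKU-1": 42}.get(sku)


if __name__ == "__main__":
    sources = [("warehouse", warehouse_api), ("erp", erp_lookup)]
    print(check_inventory("SKU-1", sources))
    print(check_inventory("SKU-9", sources))
```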

This kind of structured agent behavior is central to how we approach empowered AI agents for enterprise use, where execution is just as important as generation.

3. Composable Data Pipelines and Contextual Awareness

Agents are only as good as the context they operate in. For enterprise use cases, that context is both dynamic and distributed, spanning customer preferences, order histories, product details, pricing rules, and compliance flags.

To scale effectively, enterprises need composable pipelines that feed relevant, permission-aware context into each AI flow. This includes data transformations, filtering, enrichment, and caching that are built for reuse, not hardcoded per use case.
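A minimal sketch of what "built for reuse" can mean: small stages (enrichment with caching, permission-aware filtering, tone hints) composed into per-use-case pipelines. The role-to-field policy, `lookup_tier`, `add_brand_tone`, and the field names are hypothetical; `functools.reduce` and `functools.lru_cache` are standard-library tools. Assumes Python 3.9+.

```python
from functools import lru_cache, reduce
from typing import Any, Callable

Context = dict[str, Any]
Stage = Callable[[Context], Context]


def compose(*stages: Stage) -> Stage:
    """Chain reusable stages left to right into a single pipeline."""
    return lambda ctx: reduce(lambda acc, stage: stage(acc), stages, ctx)


@lru_cache(maxsize=1024)
def lookup_tier(customer_id: str) -> str:
    # Stand-in for a live system call; cached so repeated flows don't refetch.
    return "gold" if customer_id.endswith("7") else "standard"


def enrich_with_tier(ctx: Context) -> Context:
    return {**ctx, "tier": lookup_tier(ctx["record"]["customer_id"])}


def restrict_to_role(policy: dict[str, set[str]]) -> Stage:
    """Permission-aware filtering: keep only the record fields the role may see."""
    def stage(ctx: Context) -> Context:
        visible = policy.get(ctx["role"], set())
        return {**ctx, "record": {k: v for k, v in ctx["record"].items() if k in visible}}
    return stage


def add_brand_tone(ctx: Context) -> Context:
    return {**ctx, "tone": "warm, concise"}  # hypothetical brand guideline


FIELD_POLICY = {
    "support_agent": {"customer_id", "open_tickets"},
    "marketing": {"customer_id"},
}

# Two use cases built from the same stages, with no per-use-case plumbing.
support_context = compose(enrich_with_tier, restrict_to_role(FIELD_POLICY))
marketing_context = compose(enrich_with_tier, restrict_to_role(FIELD_POLICY), add_brand_tone)

if __name__ == "__main__":
    raw = {"customer_id": "C-107", "open_tickets": 2, "email": "ada@example.com"}
    print(support_context({"role": "support_agent", "record": raw}))
    print(marketing_context({"role": "marketing", "record": raw}))
```

Each stage returns a new context rather than mutating its input, which keeps stages safe to reorder and share across pipelines.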

A Gen AI system that can summarize a ticket is useful. One that can summarize a ticket with live SLA context, escalate to the appropriate system based on the current backlog, and align its language to brand tone is far more valuable, and far harder to build without this foundation.

4. Governance Built In: Access, Observability, and Traceability

Scalable AI must be observable and governable from day one. This means knowing which agents are active, which decisions they’ve made, and what data they accessed. It means being able to trace outputs to inputs, version prompts and flows, and enforce access policies across users and services.
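As an illustration of tracing outputs to inputs, the sketch below versions prompts in a registry, checks a per-agent access policy, and writes an audit record for every call, including denied ones. `PromptRegistry`, `run_agent`, the policy table, and the stub model are hypothetical; a real deployment would persist the log to durable storage rather than an in-memory list.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Callable


class PromptRegistry:
    """Keeps every published version so any output can be traced to its exact prompt."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}

    def publish(self, name: str, template: str) -> int:
        self._versions.setdefault(name, []).append(template)
        return len(self._versions[name])  # 1-based version number

    def get(self, name: str, version: int) -> str:
        return self._versions[name][version - 1]


ACCESS_POLICY = {"ticket_summarizer": {"alice", "svc-helpdesk"}}  # hypothetical
AUDIT_LOG: list[dict] = []


def run_agent(agent: str, caller: str, registry: PromptRegistry, prompt: str,
              version: int, inputs: dict, model: Callable[[str], str]) -> str:
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "caller": caller,
        "prompt": f"{prompt}@v{version}",
        # Hash of the inputs lets auditors match an output to what produced it.
        "input_sha256": hashlib.sha256(json.dumps(inputs, sort_keys=True).encode()).hexdigest(),
    }
    if caller not in ACCESS_POLICY.get(agent, set()):
        AUDIT_LOG.append({**entry, "outcome": "denied"})
        raise PermissionError(f"{caller} may not invoke {agent}")
    output = model(registry.get(prompt, version).format(**inputs))
    AUDIT_LOG.append({**entry, "outcome": "ok", "output": output})
    return output


def echo_model(prompt: str) -> str:
    return prompt.upper()  # stub standing in for an LLM


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.publish("summarize_ticket", "Summarize: {ticket}")
    v2 = registry.publish("summarize_ticket", "Summarize in two sentences: {ticket}")
    run_agent("ticket_summarizer", "alice", registry, "summarize_ticket", v2,
              {"ticket": "Printer offline"}, echo_model)
    print(json.dumps(AUDIT_LOG[-1], indent=2))
```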

Without this level of visibility, enterprises can’t meet compliance requirements, conduct audits, or safely deploy agents into sensitive domains. Governance should not be layered on later; it should be embedded into the platform’s core.

5. A Platform That Treats AI as Part of the Logic Fabric

At Rierino, we think of the AI layer as part of the enterprise logic fabric, not a separate layer or integration. In this view, LLMs are not the product; they’re just one type of skill, invoked and coordinated like any other service.

The platform owns the flow, the structure, and the guardrails. This enables developers and enterprise teams to compose and scale AI capabilities as part of a shared orchestration model, where everything lives in one place. We explored this further in the Low-Code Platform Guide 2025, which breaks down how modern platforms are evolving beyond UI builders to become composable, AI-ready execution engines.

Checklist: Core Capabilities for Scalable Gen AI Architecture

| Capability | Why It Matters | What to Look For |
|---|---|---|
| Execution-Oriented Orchestration | AI must go beyond outputs to trigger actions and coordinate logic | Support for stateful flows, retries, sagas, and decision trees |
| Structured Agent Logic | Ensures reliable behavior, even when model responses vary | Branching logic, fallback handling, escalation, timeouts |
| Composable Data Pipelines | Dynamic context is essential for relevant, real-time AI | Connectors to live systems, enrichment, filtering, caching layers |
| Embedded Governance | Enables auditability, access control, and risk management | Built-in observability, prompt versioning, activity logs |
| Platform-Centric AI Integration | Treats LLMs as system actors, not external add-ons | Native agent invocation, low-code flow editing, integration with business logic |

Beyond Tools: Strategic Enablers for Gen AI at Scale

Even with the right architecture in place, scaling Gen AI depends just as much on how teams, processes, and strategy are aligned around that architecture. Without these organizational foundations, even the best-designed platforms will face internal friction, siloed execution, or stalled adoption.

Centralized vs. Embedded Enablement: Getting the Org Model Right

Many enterprises start with centralized Gen AI Centers of Excellence (CoEs), which can accelerate early momentum and provide shared expertise. But as AI capabilities mature, the CoE model often becomes a bottleneck. Teams wait in line for support, while expertise and context get concentrated in a small group.

An emerging pattern is embedded enablement: creating reusable logic and orchestration models centrally, but empowering individual teams to own and scale their use cases autonomously. This hybrid model combines platform stewardship with distributed execution, helping AI scale with the organization rather than ahead of it.

Aligning Talent Across Technical Domains

As AI becomes operational, different technical domains start overlapping. LLMOps (focused on model tuning, prompt engineering, and context injection) now intersects with MLOps (model lifecycle management) and DevOps (infrastructure and orchestration).

Without alignment, Gen AI projects can suffer from unclear ownership or conflicting implementation strategies. For instance, a dev team may deploy agents through a low-code orchestration tool, while the data science team manages prompts manually, leading to version drift, duplicated logic, or inconsistent behaviors across environments.

Establishing shared patterns, handoffs, and observability across these teams is essential for scale. Execution-first platforms can help here by creating a common coordination layer, making it easier for different teams to contribute to and monitor AI workflows.

Clarifying Strategy and Ownership Beyond Initial Success

While pilot teams often run fast and independently, scaling requires clearly defined ownership over AI strategy, tooling, and roadmap. Without this, organizations risk fragmented investment, duplicated infrastructure, or platform lock-in decisions made without alignment.

Some enterprises place AI under innovation, others under data or engineering, but when there's no coordinated ownership of orchestration, logic reuse, or platform enablement, momentum slows. A well-aligned strategy includes not just a long-term vision for AI, but clarity on how teams will coordinate, who manages what, and how capabilities are tracked and evolved over time.

Platform thinking helps here by providing a shared foundation, but it's the strategic clarity that ensures that foundation gets used effectively across domains.

Standardizing Process and Reuse Across Use Cases

Early pilots often develop bespoke workflows, but this approach doesn’t scale. Over time, enterprises need repeatable frameworks: design patterns for agents, reusable data connectors, and shared escalation paths.

For example, a product enrichment agent and a compliance validation agent may use similar input-handling logic, even though they serve different teams. Or a customer-facing bot may share fallback routing with an internal workflow agent. Codifying these patterns prevents duplication and ensures consistent behavior across use cases.

This is where platform thinking matters most: treating orchestration, governance, and reuse as shared assets rather than one-off implementations makes it easier to scale Gen AI across teams and domains without reinventing the logic each time.

Checklist: Organizational Enablers for Scalable Gen AI

| Capability | Why It Matters | What to Look For |
|---|---|---|
| Organization Model | Centralized teams can drive early success but may hinder scale | Teams can build on shared assets without centralized bottlenecks |
| Tech Talent Alignment | DevOps, MLOps, and LLMOps often operate in silos | Defined ownership and collaboration across AI lifecycle roles |
| Strategy and Ownership | Scaling efforts stall without alignment on roadmap and responsibilities | Clear platform ownership, roadmap visibility, cross-functional governance |
| Process Reuse and Standardization | Without repeatable patterns, teams duplicate logic and effort | Shared orchestration templates, reusable agent patterns, centralized design guidance |
| Execution Visibility | Leaders need oversight into how and where AI is used | Unified dashboards or orchestration layers showing agent activity and flow state |

From Pilots to Platforms: The Real Shift Behind Scalable Gen AI

Many enterprises have made progress with generative AI pilots, but moving beyond that stage requires more than model access or experimentation. What differentiates organizations that scale successfully isn’t just their AI stack, but how they structure their systems and workflows around it. The shift isn’t from one tool to another. It’s from tool-first thinking to platform thinking.

Tool-first initiatives often focus on solving a specific problem in isolation: a content generator, a chatbot, a summarizer. While these may prove the value of AI, they rarely scale across teams or domains because they lack shared logic, orchestration, and governance. Each use case becomes a one-off project, with its own integrations and rules.

Platform thinking, by contrast, starts with orchestration. It means designing systems where AI agents operate within a structured execution layer: one that defines how actions are triggered, how data is handled, and how logic is reused across contexts. This kind of foundation doesn’t just support more use cases; it accelerates them.

At Rierino, we see this as the core enabler of scalable Gen AI. By embedding agents into backend workflows, coordinating their actions with composable logic, and managing them through a governed, low-code orchestration layer, we help enterprises go from isolated pilots to system-wide adoption. Not by making AI more visible, but by making it operational.

Ready to move beyond Gen AI pilots? Get in touch to see how Rierino helps enterprises operationalize AI with structured orchestration, agent coordination, and low-code backend logic, or start exploring on AWS.
