Artificial intelligence is entering a new phase defined not just by intelligence, but by autonomy. From marketing assistants that launch personalized campaigns to supply chain bots that reorder inventory on their own, the rise of agentic AI marks a turning point in how software operates. Yet as these systems gain more independence, the need for governance and guardrails becomes far more critical.

According to McKinsey, just 1% of surveyed organizations believe that their AI adoption has reached full maturity. While many enterprises are experimenting with autonomous agents and generative models, few have established the safety, accountability, and alignment mechanisms required to deploy them responsibly at scale. The challenge isn’t just about building smarter agents; it’s about ensuring those agents act within trusted, observable boundaries.

The Institute for AI Policy and Strategy’s recent field guide highlights this emerging tension: as decision-making moves from algorithms to autonomous agents, governance must evolve from reactive oversight to proactive orchestration. In other words, it’s no longer enough to manage data and models; organizations must now manage AI behavior.

At Rierino, we’ve seen this shift firsthand through enterprises scaling their generative and agentic AI initiatives from pilots to production. One insight stands out: the more powerful and connected AI agents become, the more essential governed orchestration is to balance autonomy with control.

This article explores what effective AI agent governance looks like — the risks it mitigates, the layers that make it work, and how orchestration-first low-code architecture enables trust, transparency, and security in the age of autonomous systems.

Why Governance Matters More for Agentic AI

The concept of AI governance isn’t new — most organizations already apply policies around data use, model validation, and compliance. But agentic AI changes the scope of what must be governed. Traditional systems make predictions or recommendations; agents go a step further by initiating and executing actions in live environments. That shift from decision to autonomous execution fundamentally transforms the governance challenge.

In classical AI, risk management focuses on how a model behaves. With agentic systems, it’s equally about what the model can do — and under what circumstances.

Each agent’s capacity to perceive, decide, and act introduces new layers of operational risk that data ethics frameworks or model fairness audits alone cannot address. This new paradigm requires oversight across three fronts:

- Action Control: ensuring agents can act only within predefined scopes and policies.

- Inter-Agent Coordination: managing how multiple agents interact or collaborate to prevent conflict or duplication.

- Continuous Accountability: maintaining full traceability over every decision, event, and action for audit and compliance.

As enterprises move beyond proof-of-concept deployments into production-scale systems, the absence of such controls can quickly lead to unintended automation, data exposure, or decision drift, issues that traditional governance frameworks were never designed to detect in real time.

Agentic AI, in other words, doesn’t just need smarter governance; it needs dynamic, execution-aware governance that can monitor, interpret, and intervene in the flow of autonomous operations as they unfold.

Top Governance Challenges for Autonomous Agents

Despite growing interest in agentic AI, most enterprises still underestimate the complexity of governing autonomy. The very qualities that make agents valuable (independence, adaptability, and collaboration) also introduce new and often invisible risks. Below are six of the most critical governance challenges organizations face as they move from experimentation to large-scale deployment.

1. Unbounded Autonomy: Without defined boundaries, autonomous agents may take actions that exceed their intended authority or cascade across systems. For example, a logistics optimization agent might reroute shipments to improve efficiency but inadvertently disrupt existing service-level agreements or inventory priorities. This challenge arises when autonomy is granted without clearly codified limits. Agents may operate with good intent but without awareness of strategic, ethical, or operational constraints.

2. Opaque Decision Flows: As agents self-adapt or collaborate, their internal decision logic can become difficult to trace or explain. In practice, this means a business cannot easily determine why a specific action occurred, what data triggered it, or whether it aligned with compliance policies. When decision paths lack transparency, accountability mechanisms fail, leaving enterprises unable to reconstruct cause-and-effect relationships during audits or incident analysis.

3. Runtime Drift: Over time, agents may diverge from their initial parameters as they learn from evolving data and context. A recommendation agent, for instance, might start prioritizing outcomes that maximize short-term engagement over long-term customer value if its reward signals shift subtly. Governance frameworks must therefore include continuous validation and feedback loops to detect behavioral drift before it compounds into systemic bias or unintended automation.

4. Coordination Conflicts: When multiple agents operate within shared environments, their independent actions can overlap or contradict one another. A pricing agent might apply a discount while a revenue management agent simultaneously adjusts base prices, both rational individually but conflicting collectively. These conflicts reveal the need for orchestration and dependency management, ensuring that agents don’t act in isolation but within an agreed-upon coordination protocol.

5. Auditability and Accountability Gaps: Traditional monitoring systems were not designed for real-time, autonomous decision chains. Without detailed logs of every decision and action, enterprises risk compliance blind spots, especially in regulated sectors like banking, telecom, or government. True accountability requires fine-grained observability: a continuous record of inputs, outputs, and actions that can be queried or rolled back at any point in time.

6. Resource and Cost Inefficiency: Unsupervised agents can also introduce hidden operational costs when allowed to execute actions or queries without optimization. Excessive API calls, non-strategic task repetition, or uncontrolled use of large language model tokens can quickly inflate spend without delivering proportional value. For instance, a content generation agent might consume thousands of unnecessary tokens refining an output that never reaches production. Effective governance must therefore extend to resource orchestration and budget control, ensuring that autonomy remains economically as well as operationally sustainable.
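As a rough illustration of the budget control described in the last challenge, the sketch below caps how many tokens an agent may consume. It is a minimal sketch under stated assumptions: `TokenBudget`, `BudgetExceeded`, and the limit of 1,000 tokens are hypothetical names and values chosen for this example, not part of any specific platform.

```python
from dataclasses import dataclass


class BudgetExceeded(Exception):
    """Raised when an agent tries to spend past its allotted budget."""


@dataclass
class TokenBudget:
    # Hypothetical per-agent limit; a real limit would come from policy config.
    limit: int
    spent: int = 0

    def charge(self, tokens: int) -> int:
        """Record token usage, refusing any charge that would exceed the limit."""
        if tokens < 0:
            raise ValueError("tokens must be non-negative")
        if self.spent + tokens > self.limit:
            raise BudgetExceeded(
                f"charge of {tokens} would exceed limit {self.limit} "
                f"(already spent {self.spent})"
            )
        self.spent += tokens
        return self.limit - self.spent  # remaining budget


budget = TokenBudget(limit=1000)
remaining = budget.charge(400)  # allowed: 600 tokens remain

try:
    budget.charge(700)  # refused: would bring the total to 1100
    blocked = False
except BudgetExceeded:
    blocked = True
```

The check runs before the spend is recorded, so a refused request leaves the budget untouched and the orchestrator can decide whether to stop the agent or escalate.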

Agentic AI requires governance that extends beyond model oversight to execution-time control — the ability to monitor, interpret, and intervene in agentic behavior as it unfolds. This shift sets the foundation for the next layer: designing effective, multi-tiered governance frameworks that balance autonomy with accountability.

What Effective AI Agent Governance Looks Like

Effective governance for agentic AI isn’t about limiting innovation; it’s about shaping autonomy within safe, observable boundaries. As enterprises move from experimentation to scaled deployment, governance must evolve into a multi-layered system that defines rules, enforces them during execution, and ensures continuous accountability afterward.

An emerging best practice is to view AI agent governance across three complementary layers: Policy, Platform, and Oversight. Each plays a distinct role in ensuring that autonomy remains productive, compliant, and aligned with human intent.

1. Policy Layer: Defining the Rules of Autonomy

The foundation of any governance model is a clear, codified policy framework. This layer sets the limits of autonomy, outlining what an agent can do, under what conditions, and with what access rights. For example, in a government services environment, an AI intake agent may automatically classify and route citizen requests but must flag any case that involves sensitive personal data for manual verification.

This layer acts as the blueprint for accountability. When implemented through composable low-code architectures, these rules can be versioned, reused, and adapted across teams, ensuring consistent governance as agents evolve.
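One way to picture a codified, versioned policy is as plain data that a small function evaluates. The sketch below loosely follows the citizen-intake example above. All names (`AgentPolicy`, `decide`, the action and tag strings) are assumptions made for illustration and do not describe a particular product’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    # All field names are hypothetical, chosen for illustration.
    version: str
    allowed_actions: frozenset
    review_tags: frozenset  # data tags that force manual verification


def decide(policy: AgentPolicy, action: str, data_tags: set) -> str:
    """Return 'deny', 'review', or 'allow' for a proposed agent action."""
    if action not in policy.allowed_actions:
        return "deny"
    if policy.review_tags & data_tags:
        return "review"
    return "allow"


intake_policy = AgentPolicy(
    version="1.0.0",
    allowed_actions=frozenset({"classify_request", "route_request"}),
    review_tags=frozenset({"sensitive_personal_data"}),
)

routine = decide(intake_policy, "route_request", {"address_change"})
flagged = decide(intake_policy, "route_request", {"sensitive_personal_data"})
out_of_scope = decide(intake_policy, "close_case", set())
```

Because the policy is an immutable value with a version string, a changed rule becomes a new policy object rather than an in-place edit, which keeps earlier decisions attributable to the rules in force at the time.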

2. Platform Layer: Enforcing Guardrails at Runtime

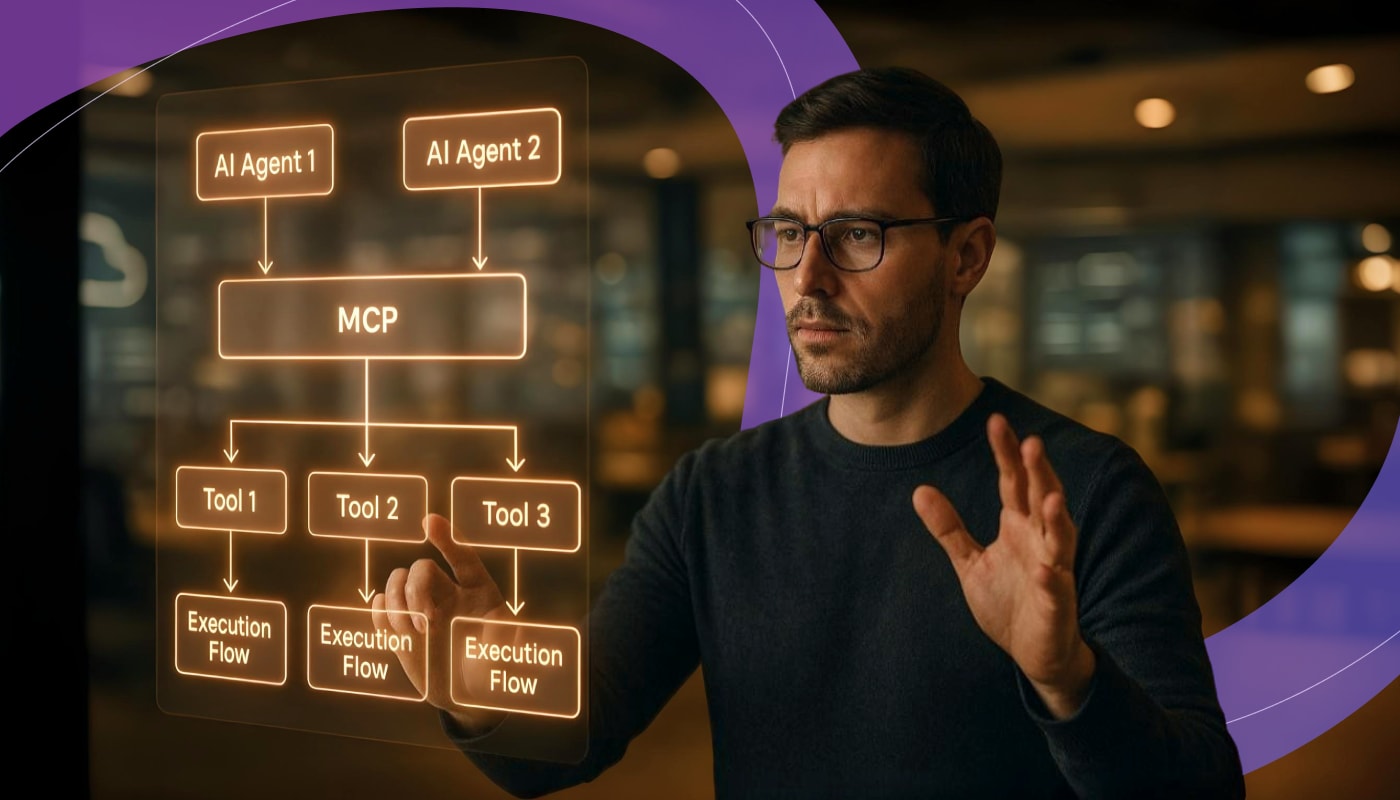

Even the best policies mean little if they can’t be enforced dynamically. The platform layer ensures that rules set at design time translate into real-time control and observability during execution. This is where orchestration becomes essential.

Through centralized execution flows, enterprises can monitor agent actions as they happen, validate them against defined policies, and trigger fallback or escalation mechanisms when anomalies occur. As an example, in financial operations, an agent responsible for reconciling cross-border transactions may autonomously process low-value payments but must request human validation for high-value or cross-jurisdiction transfers.
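The financial example above can be sketched as a runtime check that runs before the agent acts. This is a simplified illustration: the `Payment` fields, the `route_payment` function, and the 1,000.00 threshold are hypothetical, and a real deployment would read such limits from its policy layer.

```python
from dataclasses import dataclass

# Hypothetical threshold; a real limit would come from the policy layer.
AUTO_APPROVE_LIMIT = 1_000.00


@dataclass(frozen=True)
class Payment:
    amount: float
    cross_jurisdiction: bool  # assumed to be set by an upstream classifier


def route_payment(payment: Payment) -> str:
    """Decide at runtime whether the agent may process a payment on its own."""
    if payment.amount > AUTO_APPROVE_LIMIT or payment.cross_jurisdiction:
        return "escalate_to_human"
    return "auto_process"


low_value = route_payment(Payment(amount=250.0, cross_jurisdiction=False))
high_value = route_payment(Payment(amount=50_000.0, cross_jurisdiction=False))
cross_border = route_payment(Payment(amount=250.0, cross_jurisdiction=True))
```

Returning a routing decision instead of executing the payment directly keeps the guardrail separate from the action, so the orchestration flow can attach logging or a fallback to either branch.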

This layer embodies what we’ve previously described as an execution-first architecture that merges low-code flexibility with developer-level control. It’s the runtime heart of governed orchestration, where compliance, reliability, and security intersect.

3. Oversight Layer: Ensuring Continuous Accountability

The oversight layer focuses on transparency and trust after the fact. Every agentic action should leave a verifiable trace: a record of decisions, inputs, outputs, and contextual triggers.

Audit trails, event logs, and explainable traces provide both operational insight and regulatory assurance. In regulated sectors, this isn’t optional; it’s how organizations demonstrate ethical AI operation and compliance at scale. In retail environments, an assortment-planning agent might autonomously adjust product visibility on digital shelves, while oversight dashboards record every parameter change, enabling teams to trace outcomes back to specific triggers and data conditions.
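To make "verifiable trace" concrete, the sketch below chains audit records together with hashes, so that editing an earlier record is detectable. Hash chaining is one possible technique, swapped in here for illustration; the article does not prescribe it. The function names and record fields are assumptions, and timestamps are left out to keep the example deterministic.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    """Hash a record body deterministically (sorted keys)."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_record(trail: list, agent: str, action: str,
                  inputs: dict, outputs: dict) -> dict:
    """Append a record whose hash covers its content and the previous hash."""
    body = {
        "agent": agent,
        "action": action,
        "inputs": inputs,
        "outputs": outputs,
        "prev_hash": trail[-1]["hash"] if trail else "",
    }
    record = {**body, "hash": _digest(body)}
    trail.append(record)
    return record


def verify(trail: list) -> bool:
    """Recompute every hash; an edited or reordered record breaks the chain."""
    prev_hash = ""
    for record in trail:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


trail = []
append_record(trail, "assortment-agent", "set_visibility",
              {"sku": "A-100", "trigger": "low_stock"}, {"visible": False})
append_record(trail, "assortment-agent", "set_visibility",
              {"sku": "A-100", "trigger": "restock"}, {"visible": True})
intact = verify(trail)

trail[0]["outputs"]["visible"] = True  # simulate tampering with history
tampered_detected = not verify(trail)
```

Each record stores the trigger alongside the parameter change, which is what lets a team trace an outcome back to the condition that caused it.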

As seen in the evolution of agentic commerce systems, continuous observability bridges human oversight and machine autonomy. It’s not about slowing down agents; it’s about ensuring they remain explainable, reversible, and trustworthy.

Together, these three layers form the backbone of effective AI agent governance:

- Policy defines boundaries.

- Platform enforces behavior in real time.

- Oversight ensures transparency and accountability.

This multi-layered approach transforms governance from a compliance checkbox into an adaptive framework capable of supporting the next generation of AI systems that act, learn, and evolve across interconnected domains.

Orchestrated Low Code for Accountable AI

Achieving governance in agentic systems requires more than policy frameworks — it depends on the architecture that executes them. Traditional low-code platforms often focus on front-end workflows or process automation, leaving governance to be handled manually or through isolated middleware. But when AI agents operate autonomously across distributed systems, governance must be embedded within the orchestration layer itself.

An orchestrated approach to low code makes this possible. By coupling visual development with backend control, enterprises can model agentic logic, define guardrails, and manage execution within a single governed environment. The result is a system where autonomy can scale responsibly, and every action remains observable, reversible, and accountable.

1. Developer-Controlled Autonomy

In orchestrated low-code environments, developers can design agentic workflows with embedded conditions, exception handling, and escalation rules — all visually, yet with the same precision as hand-coded systems. This ensures autonomy remains bounded by transparent logic and policy. For example, a healthcare platform may use an intake agent that triages patient requests automatically but follows built-in approval steps before accessing personal data or suggesting any medical action.

At Rierino, we approach low code as a way to model governance directly within orchestration flows, so every autonomous decision remains traceable, explainable, and adaptable to changing policy requirements.

2. Secure Execution Layer

Governance becomes real at runtime. Instead of static documentation, orchestrated low-code systems enforce rules dynamically, validating every event, action, and system call before completion. In financial operations, for instance, an agent may autonomously match transactions but must trigger a verification workflow before confirming high-value payments.

Our execution framework is designed around these principles: governance, orchestration, and security operating together in a single execution-first architecture. This ensures that autonomy never bypasses compliance, even when decisions happen in milliseconds.

3. Built-in Observability and Saga Orchestration

Transparency forms the third pillar of accountable AI. Event-driven orchestration allows each agentic process to be monitored as a sequence of discrete, reversible steps. In omnichannel retail environments, if an inventory agent updates data across several systems and one update fails, the orchestration layer can automatically retry or roll back the changes to maintain consistency.
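The retry-or-roll-back behavior described above follows the general saga pattern: each step is paired with a compensation that undoes it. The sketch below is a generic, in-memory illustration of that pattern, not Rierino’s tooling. `run_saga`, `set_stock`, and the three system names are hypothetical.

```python
from typing import Callable, List, Tuple

# Each step pairs an action with the compensation that undoes it.
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step], max_attempts: int = 2) -> bool:
    """Run steps in order; on a persistent failure, undo completed steps in reverse."""
    completed: List[Step] = []
    for step in steps:
        _name, action, _compensate = step
        for attempt in range(1, max_attempts + 1):
            try:
                action()
                completed.append(step)
                break
            except Exception:
                if attempt == max_attempts:
                    for _n, _a, compensate in reversed(completed):
                        compensate()
                    return False
    return True


# Hypothetical in-memory stand-ins for three systems holding stock levels.
stock = {"erp": 10, "webshop": 10, "marketplace": 10}


def set_stock(system: str, value: int) -> Callable[[], None]:
    def apply() -> None:
        stock[system] = value
    return apply


def failing_update() -> None:
    raise ConnectionError("marketplace unavailable")


ok = run_saga([
    ("erp", set_stock("erp", 7), set_stock("erp", 10)),
    ("webshop", set_stock("webshop", 7), set_stock("webshop", 10)),
    ("marketplace", failing_update, set_stock("marketplace", 10)),
])
```

Here the third update fails on both attempts, so the first two are compensated in reverse order and every system ends up back at its original value.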

Through our saga orchestration tooling, we’ve seen how observability can evolve from a diagnostic tool into an active governance mechanism, giving enterprises not just visibility but real control over how autonomy unfolds in production.

4. Integration-First Governance

True governance also depends on how seamlessly agentic systems align with enterprise identity and compliance frameworks. Orchestrated low-code platforms can connect directly to existing IAM, monitoring, and data policies, ensuring agents inherit the same security and authorization context as human users. In a public sector platform, for instance, an autonomous case-handling agent can operate within defined access roles while still improving service throughput.

We’ve designed Rierino’s orchestration model to make governance an intrinsic property of system design rather than an external control layer. By embedding compliance logic into the orchestration fabric, enterprises can evolve their AI systems confidently, balancing innovation with accountability.

In our experience, orchestrated low code provides a sustainable path to accountable autonomy where systems act independently yet remain governed and explainable by design. This balance between freedom and control is not just a technical requirement but the foundation of enterprise trust in the age of agentic AI.

The Future of AI Agent Governance

As AI systems evolve from static models to autonomous agents, governance is no longer a matter of policy; it is a matter of architecture. Enterprise AI now operates in real-world contexts where agents can take initiative, make decisions, and trigger cascading actions across systems. Managing that level of autonomy requires more than oversight; it demands governed orchestration at the very core of execution.

The organizations that succeed with agentic AI will be those that treat governance not as a compliance requirement, but as a design principle. Policy frameworks must be codified into platform logic, decision flows must be observable in real time, and accountability must be continuous. Governance, in this sense, becomes an enabler of autonomy, allowing systems to act independently while still aligning with human, ethical, and operational intent.

At Rierino, we believe this balance defines the future of AI architecture. Our work with enterprises scaling from generative AI pilots to production-grade agent ecosystems has shown that orchestration-first low code is the bridge between innovation and control. By embedding policy, enforcement, and oversight directly into the execution layer, organizations can create systems that are not only faster and smarter, but safer and more accountable by design.

Ready to build trust in your AI systems? Get in touch to see how Rierino helps enterprises govern, orchestrate, and scale agentic AI with accountability at every layer.
